RESEARCH & PUBLICATION

Our Research Focus

Responsible AI (RAI)

Ethics and safety are non-negotiable. Our RAI playbook and ‘human-in-command’ philosophy ensure every solution is fair, transparent, and respects user privacy from concept to deployment.

Generative AI

We’re leveraging the power of Generative AI (as in Krishi Saathi and, soon, ORF) to create personalized content, automate complex tasks, and make information more accessible to those we serve.

Automatic Speech Recognition

Breaking language barriers. Our advanced Automatic Speech Recognition for diverse Indic languages is key to solutions like ORF and Agri AI Collect, enabling direct interaction and data capture through speech.

Computer Vision

From analyzing a newborn’s health via video (Shishu Maapan) to detecting crop pests or interpreting medical scans (LPA, X-Rays, DR), our computer vision research delivers critical insights.

Multimodal AI

We’re exploring how AI can understand and correlate different types of data (audio, images, and text) to create more accurate and powerful solutions, such as linking cough sounds with X-ray data for TB.

NLP (Natural Language Processing)

Our Natural Language Processing capabilities drive solutions that understand farmer queries in multiple languages (Kisan e-Mitra) and scan vast news sources for critical alerts (MDS, Krishi 24/7).

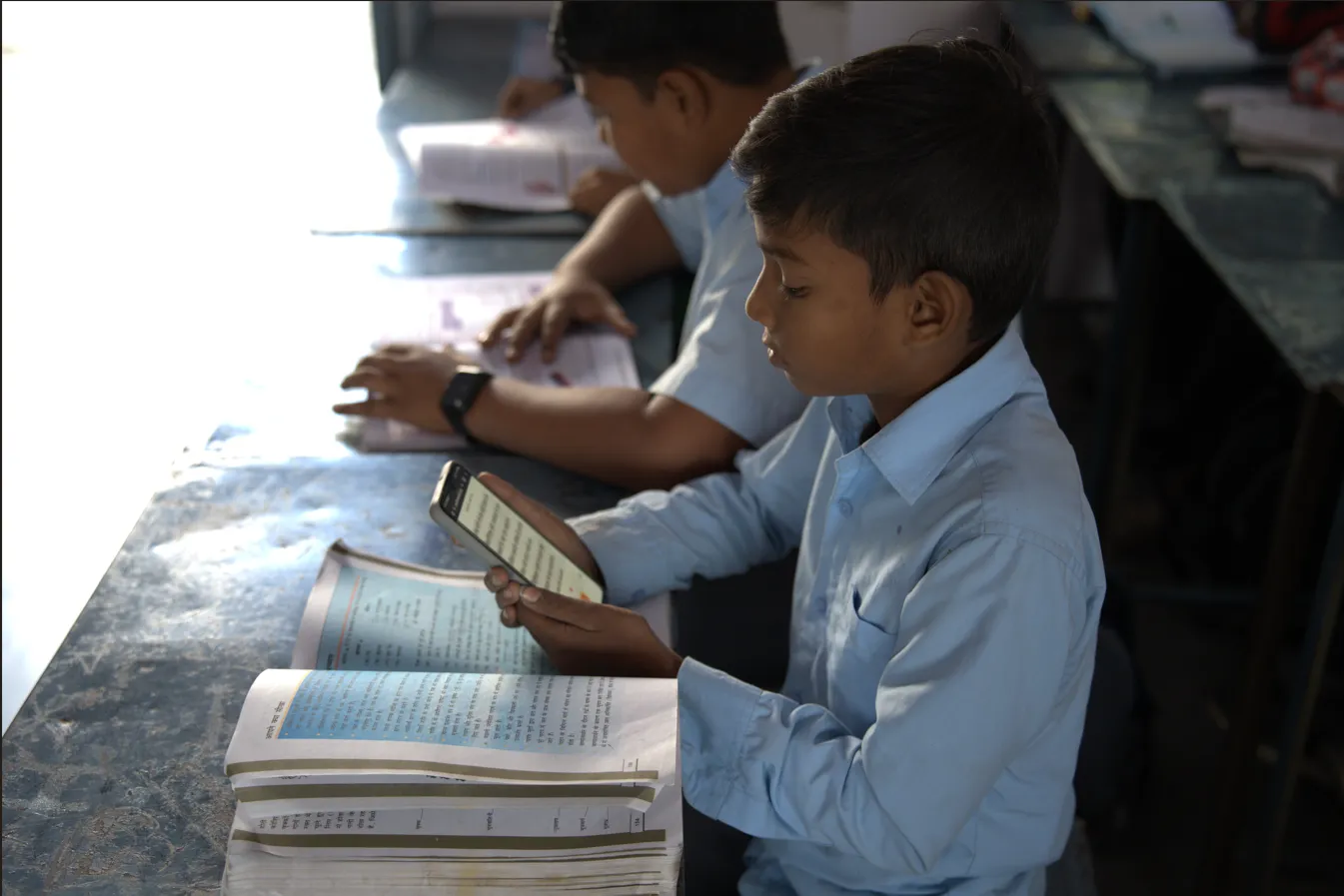

Edge & Low-Resource Solutions

Impact means accessibility. We focus on building AI that works efficiently on basic smartphones and in low-connectivity environments, ensuring our solutions reach the last mile.

Risk Stratification

Our risk stratification models (like in PATO for TB and PROS for maternal health) identify vulnerable individuals early, enabling proactive interventions that save lives.

PUBLICATIONS

Recognized Innovation

Data for Good

We believe in the power of data to drive social good. While always prioritizing ethical collection, privacy, and security (as per our Responsible Data Use Policy), we leverage diverse datasets (from government sources like Ni-kshay to data collected via our solutions, such as ORF voice samples) to build and refine impactful AI. We are committed to responsible data stewardship.